Duane M. Tilden, P.Eng October 26, 2018

Is it possible to reduce the existing fleet of power plants by 25% or more? It does seem a rather extravagant claim, considering how many power providers and utilities such an increase in energy efficiency would affect. Consider the United States as our example:

As of December 31, 2017, there were about 8,652 power plants in the United States that have operational generators with a combined nameplate electricity generation capacity of at least 1 megawatt (MW). A power plant may have one or more generators, and some generators may use more than one type of fuel. (1)

So, reducing the existing fleet by 25% would enable us to decommission approximately 2,163 of these plants. Such a plan would require examining the entire supply chain to optimize these reductions while maintaining the integrity of the existing distribution network. It would be a significant project, with an enormous impact on the economy and on meeting carbon reduction strategies on a global scale.

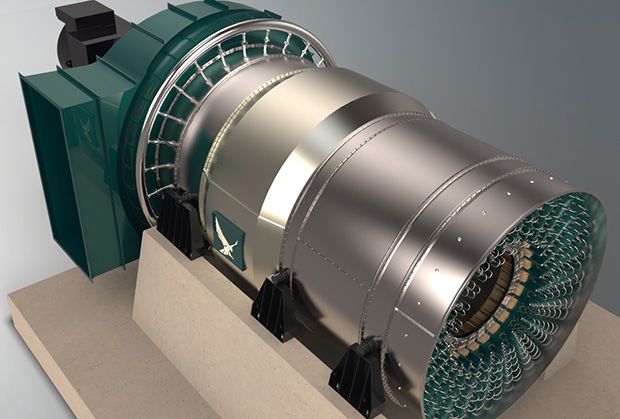

Supercritical Carbon Dioxide (SCCD) Turbines

In previous posts I have discussed the technology of SCCD turbines for power production and how this system can be used for a wide variety of power production and energy extraction methods. A recent article published by Euan Mearns with commentary delves even deeper into this technology to discuss the global impacts of increased power production efficiency on reducing carbon emissions.

GHGs, carbon, NOx, pollution, waste heat, entropy effects, and consumption of resources are all commensurately reduced when we systematically increase power production energy efficiency at the plant level. An improvement in energy efficiency at the system level has a profound impact, either as added output capacity or as reduced input. For example, if we raise conversion efficiency by 10 percentage points, from 30% to 40%, the output of the plant for the same fuel input rises by a factor of 4/3, or 33%. Conversely, for the same output the input requirement falls to 3/4 of its former value, a reduction of 25%.
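The arithmetic behind the 30% to 40% example can be checked with a few lines of Python. This is only a sketch; the function names `output_gain` and `input_reduction` are my own and do not come from any library.

```python
def output_gain(eff_old: float, eff_new: float) -> float:
    """Fractional increase in electrical output for the same fuel input."""
    return eff_new / eff_old - 1.0


def input_reduction(eff_old: float, eff_new: float) -> float:
    """Fractional decrease in fuel input for the same electrical output."""
    return 1.0 - eff_old / eff_new


# The example from the text: conversion efficiency rises from 30% to 40%.
print(f"Output gain at constant input:     {output_gain(0.30, 0.40):.1%}")      # 33.3%
print(f"Input reduction at constant output: {input_reduction(0.30, 0.40):.1%}")  # 25.0%
```

Note that the two figures are not symmetric: the same efficiency change reads as a 33% gain or a 25% saving depending on whether input or output is held constant.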

Power Plant Energy Efficiency

To measure the energy efficiency of a thermo-electric power plant we use the heat rate: the fuel energy, in Btu, consumed per kilowatt-hour of electricity generated. A lower heat rate means a more efficient plant. Depending on the quality of the fuel and the systems installed, we convert heat energy into electrical energy using steam generators or boilers. We convert water into steam to drive turbines, which are coupled to generators that convert mechanical motion into electricity.

Examination of data provided will be simplified using statistical averages. In 2017 the average heat rates and conversion efficiencies for thermal-electric power plants in the US (2) are given as follows:

- Coal: 10465 Btu/kWh – 32.6%

- Petroleum: 10834 Btu/kWh – 31.5%

- Natural Gas: 7812 Btu/kWh – 43.7%

- Nuclear: 10459 Btu/kWh – 32.6%

Examination of the US EIA data for 2017 shows that natural gas is currently 11.1 percentage points more efficient than coal at producing electricity, while consuming 25.4% less fuel for the same energy output.
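The percentages above follow directly from the heat rates. As a rough check, assuming the standard conversion of about 3,412 Btu of heat per kWh of electricity (the constant and the function names below are mine, not taken from the EIA tables), the figures can be reproduced like this:

```python
# Assumption: thermal equivalent of 1 kWh of electricity, in Btu.
BTU_PER_KWH = 3412.14

# 2017 average heat rates quoted in the list above, in Btu per kWh.
HEAT_RATES = {
    "Coal": 10465,
    "Petroleum": 10834,
    "Natural Gas": 7812,
    "Nuclear": 10459,
}


def efficiency(heat_rate: float) -> float:
    """Conversion efficiency (0..1) implied by a heat rate in Btu/kWh."""
    return BTU_PER_KWH / heat_rate


def fuel_saving(heat_rate_new: float, heat_rate_old: float) -> float:
    """Fraction of fuel energy saved per kWh when moving to the lower heat rate."""
    return 1.0 - heat_rate_new / heat_rate_old


for fuel, heat_rate in HEAT_RATES.items():
    print(f"{fuel}: {efficiency(heat_rate):.1%}")

gap = efficiency(HEAT_RATES["Natural Gas"]) - efficiency(HEAT_RATES["Coal"])
saving = fuel_saving(HEAT_RATES["Natural Gas"], HEAT_RATES["Coal"])
print(f"Gas vs. coal: {gap * 100:.1f} percentage points, {saving:.1%} less fuel")
```

The gap in efficiency is measured in percentage points, while the fuel saving is a true percentage of the coal plant's fuel input.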

So we already have proof that, at the plant level, a modest improvement in the efficiency of the thermodynamic cycle is leveraged into a larger reduction in fuel consumption. For natural gas power plants the current state of the art is the combined cycle, which is not employed in other thermo-electric power plants.

HOW A COMBINED-CYCLE POWER PLANT PRODUCES ELECTRICITY (3)

This is how a combined-cycle plant works to produce electricity and captures waste heat from the gas turbine to increase efficiency and electrical output.

- Gas turbine burns fuel.
  - The gas turbine compresses air and mixes it with fuel that is heated to a very high temperature. The hot air-fuel mixture moves through the gas turbine blades, making them spin.
  - The fast-spinning turbine drives a generator that converts a portion of the spinning energy into electricity.

- Heat recovery system captures exhaust.
  - A Heat Recovery Steam Generator (HRSG) captures exhaust heat from the gas turbine that would otherwise escape through the exhaust stack.
  - The HRSG creates steam from the gas turbine exhaust heat and delivers it to the steam turbine.

- Steam turbine delivers additional electricity.
  - The steam turbine sends its energy to the generator drive shaft, where it is converted into additional electricity.

Figure 1. Schematic of Combined Cycle Gas/Steam Turbine Power Plant with Heat Recovery (4)

Comparing Combined Cycle Gas Turbines with SCCD Turbines

The study of thermodynamic cycles is generally the domain of engineers and physicists who employ advanced math and physics skills. The gas turbine is based on the Brayton cycle, while steam turbines operate on the Rankine cycle. The Rankine cycle uses a working fluid such as water, which undergoes a phase change from liquid to steam. The Brayton cycle is based on a single-phase working fluid, in this case air and the combustion products of natural gas.

Both SCCD turbines and gas turbines operate on the Brayton cycle; however, they use different working fluids and have different requirements based on operating conditions. The gas-fired turbine takes in air, which is compressed by the inlet section of the turbine; natural gas is then combined with the compressed air and ignited. The hot expanding gases turn the turbine, converting heat to mechanical energy. A jet engine also operates on the Brayton cycle.
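For readers who want a feel for the numbers, the textbook air-standard (ideal) Brayton cycle has a thermal efficiency that depends only on the compressor pressure ratio and the ratio of specific heats. The sketch below uses that idealization; the pressure ratios are hypothetical examples, and the formula assumes ideal-gas behavior, so it does not describe a supercritical CO2 cycle, where real-gas effects dominate.

```python
def ideal_brayton_efficiency(pressure_ratio: float, gamma: float = 1.4) -> float:
    """Air-standard Brayton efficiency: 1 - r^(-(gamma - 1) / gamma).

    pressure_ratio: compressor outlet pressure / inlet pressure (>= 1).
    gamma: ratio of specific heats; 1.4 is the usual value for air.
    """
    if pressure_ratio < 1.0:
        raise ValueError("pressure ratio must be at least 1")
    return 1.0 - pressure_ratio ** (-(gamma - 1.0) / gamma)


# Hypothetical pressure ratios, chosen only to show the trend.
for ratio in (5, 10, 15, 20):
    print(f"pressure ratio {ratio:>2}: ideal efficiency {ideal_brayton_efficiency(ratio):.1%}")
```

Real gas turbines fall well short of these ideal values because of compressor and turbine losses, which is part of why recovering the exhaust heat is so valuable.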

For a combined cycle gas turbine, some of the waste heat is recovered by a heat exchange system in the flue stack and converted to steam, which drives a second turbine to produce more electricity and increase the overall energy efficiency of the system.
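The benefit of the second turbine can be sketched with a simple energy balance: whatever heat the gas turbine does not convert is passed to the steam side, which converts a further fraction of it. The efficiencies used below are illustrative assumptions, not measured plant data, and the function name is my own.

```python
def combined_cycle_efficiency(eff_gas: float, eff_bottoming: float) -> float:
    """Overall efficiency of a topping cycle plus a heat-recovery bottoming cycle.

    eff_gas: fraction of fuel energy the gas turbine converts to electricity.
    eff_bottoming: fraction of the gas turbine's rejected heat that the
        heat recovery steam generator and steam turbine together convert
        to electricity (this lumps recovery losses into one number).
    """
    rejected_heat = 1.0 - eff_gas
    return eff_gas + rejected_heat * eff_bottoming


# Illustrative values only: a 35% gas turbine whose exhaust heat is
# converted at 30% by the steam side.
print(f"{combined_cycle_efficiency(0.35, 0.30):.1%}")
```

Even a modest bottoming cycle lifts the overall figure substantially, because it works on heat that would otherwise leave through the stack.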

In the case of an SCCD turbine, the working fluid is maintained in a closed loop, continually heated through a heat exchanger from an external source and piped through the turbine and a compressor. Secondary heat exchangers for recuperation and cooling may be employed. These are all emerging technologies undergoing serious R&D by the US DOE in partnership with industry and others.

Figure 2. Closed Loop SCO2 Recompression Brayton Cycle Flow Diagram (NETL)

Technology Development for Supercritical Carbon Dioxide (SCO2) Based Power Cycles

The Advanced Turbines Program at NETL will conduct R&D for directly and indirectly heated supercritical carbon dioxide (CO2) based power cycles for fossil fuel applications. The focus will be on components for indirectly heated fossil fuel power cycles with turbine inlet temperature in the range of 1300 – 1400 ºF (700 – 760 ºC) and oxy-fuel combustion for directly heated supercritical CO2 based power cycles.

The supercritical carbon dioxide power cycle operates in a manner similar to other turbine cycles, but it uses CO2 as the working fluid in the turbomachinery. The cycle is operated above the critical point of CO2 so that it does not change phases (from liquid to gas), but rather undergoes drastic density changes over small ranges of temperature and pressure. This allows a large amount of energy to be extracted at high temperature from equipment that is relatively small in size. SCO2 turbines will have a nominal gas path diameter an order of magnitude smaller than utility scale combustion turbines or steam turbines.

The cycle envisioned for the first fossil-based indirectly heated application is a non-condensing closed-loop Brayton cycle with heat addition and rejection on either side of the expander, like that in Figure 1. In this cycle, the CO2 is heated indirectly from a heat source through a heat exchanger, not unlike the way steam would be heated in a conventional boiler. Energy is extracted from the CO2 as it is expanded in the turbine. Remaining heat is extracted in one or more highly efficient heat recuperators to preheat the CO2 going back to the main heat source. These recuperators help increase the overall efficiency of the cycle by limiting heat rejection from the cycle. (4)
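The NETL description hinges on keeping the CO2 above its critical point, which lies at roughly 31 °C and 7.38 MPa. The small sketch below checks whether a given state is supercritical and converts the quoted turbine inlet temperatures from °F to °C. The operating pressure of 25 MPa is a hypothetical value chosen for illustration; it does not come from the NETL text.

```python
# Critical point of carbon dioxide (standard reference values).
CO2_CRITICAL_TEMP_C = 30.98      # degrees Celsius (304.13 K)
CO2_CRITICAL_PRESSURE_MPA = 7.377


def fahrenheit_to_celsius(temp_f: float) -> float:
    """Convert a temperature from degrees Fahrenheit to degrees Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0


def is_supercritical_co2(temp_c: float, pressure_mpa: float) -> bool:
    """True when both temperature and pressure exceed the CO2 critical point."""
    return temp_c > CO2_CRITICAL_TEMP_C and pressure_mpa > CO2_CRITICAL_PRESSURE_MPA


# Turbine inlet temperature range quoted above, with an assumed 25 MPa pressure.
for temp_f in (1300, 1400):
    temp_c = fahrenheit_to_celsius(temp_f)
    state = is_supercritical_co2(temp_c, pressure_mpa=25.0)
    print(f"{temp_f} F = {temp_c:.0f} C, supercritical at 25 MPa: {state}")
```

The turbine inlet is far above the critical temperature; it is at the cold end of the loop, near the compressor inlet, that the state sits close to the critical point and the large density changes described above occur.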

Commentary and Conclusion

We are already on the way to developing new systems that offer significant improvements over existing ones, driven by advancements in materials and technology as well as by climate concerns and the democratization of the energy supply. Every percentage point of increase in performance reduces the consumption of fossil fuels, depletion of natural resources, generated waste products and potential impacts on climate.

SCCD systems offer a retrofit solution into existing power plants where these systems can be installed to replace existing steam turbines to reach energy efficiency levels of Combined Cycle Gas Turbines. This is a remarkable development in technology which can be enabled globally, in a very short time frame.

References:

1. USEIA: How many power plants are there in the United States?

2. USEIA: Average Operating Heat Rate for Selected Energy Sources

3. GE: combined-cycle-power-plant-how-it-works

4. https://www.netl.doe.gov/research/coal/energy-systems/turbines/supercritical-co2-turbomachinery